MealMeter: Using Multimodal Sensing and Machine Learning for Automatically Estimating Nutrition Intake

Apr 7, 2025


Asiful Arefeen

Samantha Fessler

Sayyed Mostafa Mostafavi

Carol Johnston

Hassan Ghasemzadeh

Abstract

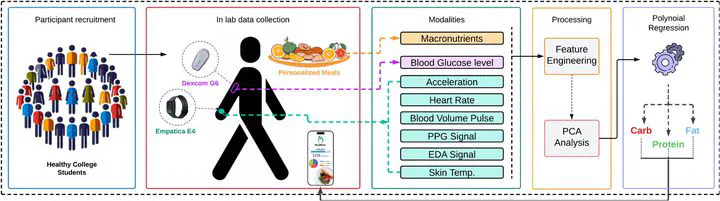

Accurate estimation of meal macronutrient composition is a prerequisite for precision nutrition, metabolic health monitoring, and glycemic management. Traditional dietary assessment methods, such as self-reported food logs or diet recalls, are time-intensive and prone to inaccuracies and biases. Several existing AI-driven frameworks are data-intensive. In this study, we propose MealMeter, a machine-learning-driven method that leverages multimodal sensor data from wearable and mobile devices. Data collected from 12 participants are used to estimate macronutrient intake. Our approach integrates physiological signals (e.g., continuous glucose, heart rate variability), inertial motion data, and environmental cues to model the relationship between meal intake and metabolic responses. Using lightweight machine learning models trained on a diverse dataset of labeled meal events, MealMeter predicts the composition of carbohydrates, proteins, and fats with high accuracy. Our results demonstrate that multimodal sensing combined with machine learning significantly improves meal macronutrient estimation compared to baselines, including a foundation model, and achieves an average mean absolute error (MAE) and an average root mean squared relative error (RMSRE) as low as 13.2 grams and 0.37, respectively, for carbohydrates. Therefore, our developed system has the potential to automate meal tracking, enhance dietary interventions, and support personalized nutrition strategies for individuals managing metabolic disorders such as diabetes and obesity.
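For readers unfamiliar with the two error metrics named in the abstract, the following is a minimal sketch of how MAE and RMSRE are commonly computed. The abstract does not give the exact formulas, so the RMSRE definition used here (root of the mean squared error relative to the true value) is an assumption, and the function names and sample carbohydrate values are hypothetical, not data from the study.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error, in the same unit as the inputs (here, grams)."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmsre(y_true, y_pred):
    """Root mean squared relative error (unitless).

    Assumed definition: sqrt(mean(((true - pred) / true) ** 2)).
    True values must be non-zero.
    """
    squared_rel = [((t - p) / t) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_rel) / len(squared_rel))

# Hypothetical carbohydrate amounts (grams) for three meals; not study data.
carbs_true = [50.0, 80.0, 40.0]
carbs_pred = [60.0, 72.0, 44.0]

print(f"MAE:   {mae(carbs_true, carbs_pred):.2f} g")
print(f"RMSRE: {rmsre(carbs_true, carbs_pred):.3f}")
```

Because RMSRE divides each error by the true amount, it penalizes a 10 g miss on a small meal more than the same miss on a large one, whereas MAE treats both equally.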

Type

Publication

The 47th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), July 14–17, 2025, Copenhagen, Denmark