Domain Knowledge Aided Explainable Artificial Intelligence for Intrusion Detection and Response

Abstract

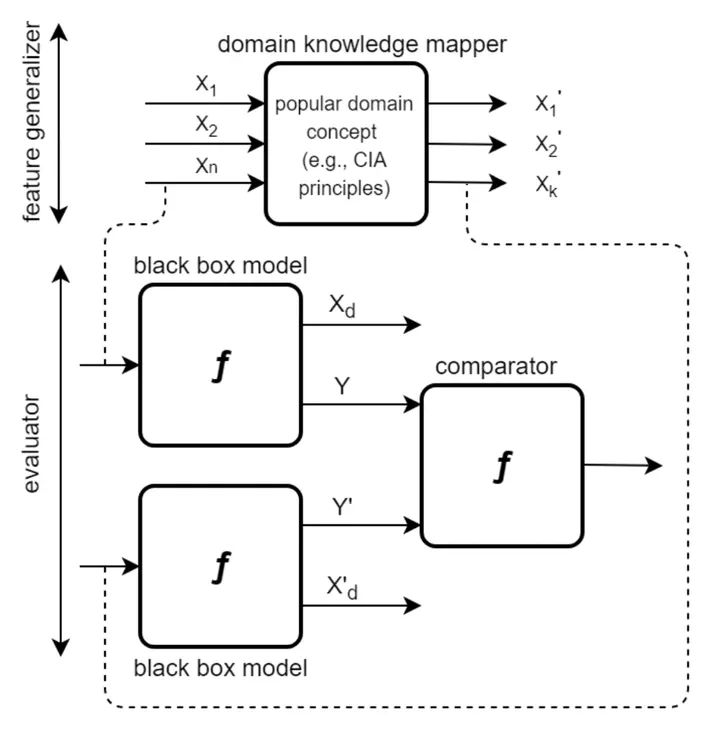

Artificial Intelligence (AI) has become an integral part of modern-day security solutions because of its ability to learn very complex functions and to handle "Big Data". However, the lack of explainability and interpretability in successful AI models is a key stumbling block when trust in a model's prediction is critical. This necessitates human intervention, which in turn delays the response or decision. AI-based intrusion detection systems have advanced greatly in speed and performance. Yet when a specific prediction or decision must be explained and interpreted, the response still proceeds at human speed. In this work, we infuse widely used security domain knowledge (i.e., the CIA principles of confidentiality, integrity, and availability) into our model for better explainability, and we validate the approach on a network intrusion detection test case. Our experimental results suggest that infusing domain knowledge provides better explainability as well as faster decisions or responses. In addition, the infused domain knowledge helps the model generalize to unknown attacks and opens a path to handling large streams of network traffic from numerous IoT devices.

Date

Feb 1, 2023 12:30 PM – 1:00 PM

Event

EMIL Spring'23 Seminars

Location

Online (Zoom)